2A.eco - Traitement automatique de la langue en Python - correction#

Links: notebook, html, python, slides, GitHub

Correction d’exercices liés au traitement automatique du langage naturel.

from jyquickhelper import add_notebook_menu

add_notebook_menu()

On télécharge les données textuelles nécessaires pour le package nltk.

import nltk

nltk.download('stopwords')

[nltk_data] Downloading package stopwords to [nltk_data] C:UsersxavieAppDataRoamingnltk_data... [nltk_data] Package stopwords is already up-to-date!

True

Exercice 1#

corpus = {

'a' : "Mr. Green killed Colonel Mustard in the study with the candlestick. "

"Mr. Green is not a very nice fellow.",

'b' : "Professor Plum has a green plant in his study.",

'c' : "Miss Scarlett watered Professor Plum's green plant while he was away "

"from his office last week."

}

terms = {

'a' : [ i.lower() for i in corpus['a'].split() ],

'b' : [ i.lower() for i in corpus['b'].split() ],

'c' : [ i.lower() for i in corpus['c'].split() ]

}

from math import log

QUERY_TERMS = ['green', 'plant']

def tf(term, doc, normalize=True):

doc = doc.lower().split()

if normalize:

return doc.count(term.lower()) / float(len(doc))

else:

return doc.count(term.lower()) / 1.0

def idf(term, corpus):

num_texts_with_term = len([True for text in corpus if term.lower()

in text.lower().split()])

try:

return 1.0 + log(float(len(corpus)) / num_texts_with_term)

except ZeroDivisionError:

return 1.0

def tf_idf(term, doc, corpus):

return tf(term, doc) * idf(term, corpus)

query_scores = {'a': 0, 'b': 0, 'c': 0}

for term in [t.lower() for t in QUERY_TERMS]:

for doc in sorted(corpus):

score = tf_idf(term, corpus[doc], corpus.values())

query_scores[doc] += score

print("Score TF-IDF total pour le terme '{}'".format(' '.join(QUERY_TERMS), ))

for (doc, score) in sorted(query_scores.items()):

print(doc, score)

Score TF-IDF total pour le terme 'green plant'

a 0.10526315789473684

b 0.26727390090090714

c 0.1503415692567603

Deux documents possibles : b ou c (a ne contient pas le mot “plant”). B est plus court : donc green plant “pèse” plus.

QUERY_TERMS = ['plant', 'green']

query_scores = {'a': 0, 'b': 0, 'c': 0}

for term in [t.lower() for t in QUERY_TERMS]:

for doc in sorted(corpus):

score = tf_idf(term, corpus[doc], corpus.values())

query_scores[doc] += score

print("Score TF-IDF total pour le terme '{}'".format(' '.join(QUERY_TERMS), ))

for (doc, score) in sorted(query_scores.items()):

print(doc, score)

Score TF-IDF total pour le terme 'plant green'

a 0.10526315789473684

b 0.26727390090090714

c 0.1503415692567603

Le score TF-IDF ne tient pas compte de l’ordre des mots. Approche “bag of words”.

QUERY_TERMS = ['green']

term = [t.lower() for t in QUERY_TERMS]

term = 'green'

query_scores = {'a': 0, 'b': 0, 'c': 0}

for doc in sorted(corpus):

score = tf_idf(term, corpus[doc], corpus.values())

query_scores[doc] += score

print("Score TF-IDF total pour le terme '{}'".format(term))

for (doc, score) in sorted(query_scores.items()):

print(doc, score)

Score TF-IDF total pour le terme 'green'

a 0.10526315789473684

b 0.1111111111111111

c 0.0625

len(corpus['b'])/len(corpus['a'])

0.4423076923076923

Scores proches entre a et b. a contient deux fois ‘green’, mais b est plus de deux fois plus court, donc le score est plus élevé. Il existe d’autres variantes de tf-idf. Il faut choisir celui qui correspond le mieux à vos besoins.

Exercice 2#

Elections américaines#

import json

import nltk

USER_ID = '107033731246200681024'

with open('./ressources_googleplus/' + USER_ID + '.json', 'r') as f:

activity_results=json.load(f)

all_content = " ".join([ a['object']['content'] for a in activity_results ])

tokens = all_content.split()

text = nltk.Text(tokens)

text.concordance('Hillary')

Displaying 2 of 2 matches:

fund a Get Out The Vote effort for Hillary in Pennsylvania. There's a transit

will pay for rides to the polls for Hillary voters via Uber and Lyft. I just su

text.concordance('Trump')

Displaying 3 of 3 matches:

is made me laugh out loud. One thing Trump has been good for is the rise of col

, its funding is under attack by the Trump administration. This is the infrastr

g." I dreamed last night that Donald Trump was taking people on a tour through

text.concordance('vote')

Displaying 7 of 7 matches:

the first time bucked management to vote in favor of a climate-risk resolutio

Boot Camp on the way in. My Ride To Vote has created a crowdfunding campaign

nding campaign to fund a Get Out The Vote effort for Hillary in Pennsylvania.

didates and which contacts might not vote on election day. Next, we provide yo

y http://oreil.ly/2f54ypw Start-Up & Vote is a movement to encourage tech comm

ent to encourage tech communities to vote early and vote together. Get a group

e tech communities to vote early and vote together. Get a group together for y

text.concordance('politics')

Displaying 3 of 3 matches:

ext for the current state of world politics and what I've been calling the #WT

ge the way you think about today's politics as well. I am so glad that Russ Ro

pears to be a deeper dive into the politics of top billionaires. In this time

fdist = text.vocab()

fdist['Hillary'], fdist['Trump'], fdist['vote'], fdist['politics']

(2, 3, 4, 3)

Loi Zipf#

%matplotlib inline

fdist = text.vocab()

no_stopwords = [(k,v) for (k,v) in fdist.items() if k.lower() \

not in nltk.corpus.stopwords.words('english')]

#nltk a été porté en python récemment, quelques fonctionnalités se sont perdues

#(par exemple, Freq Dist n'est pas toujours ordonné par ordre décroissant)

#fdist_no_stopwords = nltk.FreqDist(no_stopwords)

#fdist_no_stopwords.plot(100, cumulative = True)

#le plus rapide : passer par pandas

import pandas as p

df_nostopwords = p.Series(dict(no_stopwords))

df_nostopwords.sort_values(ascending=False)

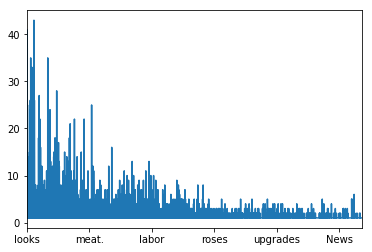

df_nostopwords.plot();

import matplotlib.pyplot as plt

df_nostopwords=p.Series(dict(no_stopwords))

df_nostopwords.sort_values(ascending=False)

df_nostopwords=p.DataFrame(df_nostopwords)

df_nostopwords.rename(columns={0:'count'},inplace=True)

df_nostopwords['one']=1

df_nostopwords['rank']=df_nostopwords['one'].cumsum()

df_nostopwords['zipf_law']=df_nostopwords['count'].iloc[0]/df_nostopwords['rank']

df_nostopwords=df_nostopwords[1:]

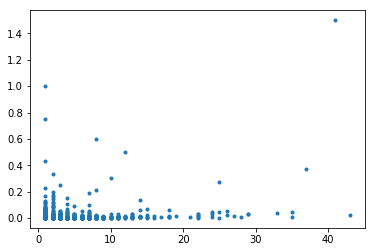

plt.plot(df_nostopwords['count'],df_nostopwords['zipf_law'], '.');

df = p.Series(fdist)

df.sort_values(ascending=False)

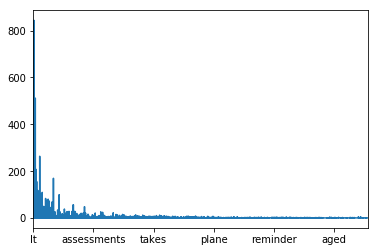

df.plot();

df = p.Series(fdist)

df.sort_values(ascending=False)

df=p.DataFrame(df)

df.rename(columns={0:'count'},inplace=True)

df['one']=1

df['rank']=df['one'].cumsum()

df['zipf_law']=df['count'].iloc[0]/df['rank']

df=df[1:]

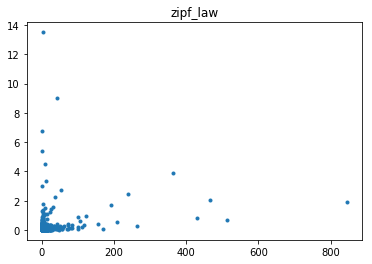

fig, ax = plt.subplots(1, 1)

ax.plot(df['count'], df['zipf_law'], '.')

ax.set_title("zipf_law");

Diversité du vocabulaire#

def lexical_diversity(token_list):

return len(token_list) / len(set(token_list))

USER_ID = '107033731246200681024'

with open('./ressources_googleplus/' + USER_ID + '.json', 'r') as f:

activity_results=json.load(f)

all_content = " ".join([ a['object']['content'] for a in activity_results ])

tokens = all_content.split()

text = nltk.Text(tokens)

lexical_diversity(tokens)

3.075705808307858

Exercice 3#

3-1 Autres termes de recherche#

import json

import nltk

path = 'ressources_googleplus/107033731246200681024.json'

text_data = json.loads(open(path).read())

QUERY_TERMS = ['open','data']

activities = [activity['object']['content'].lower().split() \

for activity in text_data \

if activity['object']['content'] != ""]

# Le package TextCollection contient un module tf-idf

tc = nltk.TextCollection(activities)

relevant_activities = []

for idx in range(len(activities)):

score = 0

for term in [t.lower() for t in QUERY_TERMS]:

score += tc.tf_idf(term, activities[idx])

if score > 0:

relevant_activities.append({'score': score, 'title': text_data[idx]['title'],

'url': text_data[idx]['url']})

# Tri par score et présentation des résultats

relevant_activities = sorted(relevant_activities,

key=lambda p: p['score'], reverse=True)

c=0

for activity in relevant_activities:

if c < 6:

print(activity['title'])

print('\tLink: {}'.format(activity['url']))

print('\tScore: {}'.format(activity['score']))

c+=1

This is a really important piece about open data and platforms.

Link: https://plus.google.com/+TimOReilly/posts/fo9uxWTctHb

Score: 0.5498599632119789

I love new sources of trend data about technology adoption. We've used variations of this for years ...

Link: https://plus.google.com/+TimOReilly/posts/FetXVRJeJFv

Score: 0.17368671875174563

If you love Hamilton, as I do, and you're interested in data visualization, you'll find this fascinating...

Link: https://plus.google.com/+TimOReilly/posts/NNsiSo8K7B7

Score: 0.16687547487912816

Data can play a great role in advancing sustainability. I'm quoted in this short video from Planet Labs...

Link: https://plus.google.com/+TimOReilly/posts/45KX41Q2LN4

Score: 0.15760461516362104

Mark Cuban's tweet about data science in the NBA, featuring the image of his screen and an O'Reilly ...

Link: https://plus.google.com/+TimOReilly/posts/2hCQhfTaX5g

Score: 0.14184415364725894

An excellent demonstration of why Open Access lowers the barriers to knowledge-sharing in science. This...

Link: https://plus.google.com/+TimOReilly/posts/iQ4RdspWxbY

Score: 0.13381568843277453

3-2 Autres métriques de distance#

from math import log

def tf_binary(term, doc):

doc_l = [d.lower() for d in doc]

if term.lower() in doc:

return 1.0

else:

return 0.0

def tf_rawfreq(term, doc):

doc_l = [d.lower() for d in doc]

return doc_l.count(term.lower())

def tf_lognorm(term,doc):

doc_l = [d.lower() for d in doc]

if doc_l.count(term.lower()) > 0:

return 1.0 + log(doc_l.count(term.lower()))

else:

return 1.0

def idf(term,corpus):

num_texts_with_term = len([True for text in corpus\

if term.lower() in text])

try:

return log(float(len(corpus) / num_texts_with_term))

except ZeroDivisionError:

return 1.0

def idf_init(term, corpus):

num_texts_with_term = len([True for text in corpus\

if term.lower() in text])

try:

return 1.0 + log(float(len(corpus)) / num_texts_with_term)

except ZeroDivisionError:

return 1.0

def idf_smooth(term,corpus):

num_texts_with_term = len([True for text in corpus\

if term.lower() in text])

try:

return log(1.0 + float(len(corpus) / num_texts_with_term))

except ZeroDivisionError:

return 1.0

def tf_idf0(term, doc, corpus):

return tf_binary(term, doc) * idf(term, corpus)

def tf_idf1(term, doc, corpus):

return tf_rawfreq(term, doc) * idf(term, corpus)

def tf_idf2(term, doc, corpus):

return tf_lognorm(term, doc) * idf(term, corpus)

def tf_idf3(term, doc, corpus):

return tf_rawfreq(term, doc) * idf_init(term, corpus)

def tf_idf4(term, doc, corpus):

return tf_lognorm(term, doc) * idf_init(term, corpus)

def tf_idf5(term, doc, corpus):

return tf_rawfreq(term, doc) * idf_smooth(term, corpus)

def tf_idf6(term, doc, corpus):

return tf_lognorm(term, doc) * idf_smooth(term, corpus)

import json

import nltk

path = 'ressources_googleplus/107033731246200681024.json'

text_data = json.loads(open(path).read())

QUERY_TERMS = ['open','data']

activities = [activity['object']['content'].lower().split() \

for activity in text_data \

if activity['object']['content'] != ""]

relevant_activities = []

for idx in range(len(activities)):

score = 0

for term in [t.lower() for t in QUERY_TERMS]:

score += tf_idf1(term, activities[idx],activities)

if score > 0:

relevant_activities.append({'score': score, 'title': text_data[idx]['title'],

'url': text_data[idx]['url']})

# Tri par score et présentation des résultats

relevant_activities = sorted(relevant_activities,

key=lambda p: p['score'], reverse=True)

c=0

for activity in relevant_activities:

if c < 6:

print(activity['title'])

print('\tLink: {}'.format(activity['url']))

print('\tScore: {}'.format(activity['score']))

c+=1

The 10-year contract for the US recreation.gov site is up for renewal, and the Department of the Interior...

Link: https://plus.google.com/+TimOReilly/posts/cmjFvKC5S9v

Score: 23.81914493188566

Can We Use Data to Make Better Regulations?

Evgeny Morozov either misunderstands or misrepresents the...

Link: https://plus.google.com/+TimOReilly/posts/gboAUahQwuZ

Score: 11.347532291780714

I love new sources of trend data about technology adoption. We've used variations of this for years ...

Link: https://plus.google.com/+TimOReilly/posts/FetXVRJeJFv

Score: 8.510649218835535

Mark Cuban's tweet about data science in the NBA, featuring the image of his screen and an O'Reilly ...

Link: https://plus.google.com/+TimOReilly/posts/2hCQhfTaX5g

Score: 8.510649218835535

The title of this piece doesn't do it justice. The description does better: "This talk discusses how...

Link: https://plus.google.com/+TimOReilly/posts/YjzTq5x45MC

Score: 8.510649218835535

I'm doing a ProductHunt AMA at 9 am PT this morning. I love getting people thinking harder about how...

Link: https://plus.google.com/+TimOReilly/posts/KFxXr6qTEHS

Score: 6.048459595331767

Pensez-vous que pour notre cas la fonction tf_binary est justifiée ?

Exercice 4#

import json

import nltk

path = 'ressources_googleplus/107033731246200681024.json'

data = json.loads(open(path).read())

# Sélection des textes qui ont plus de 1000 mots

data = [ post for post in json.loads(open(path).read()) \

if len(post['object']['content']) > 1000 ]

all_posts = [post['object']['content'].lower().split()

for post in data ]

tc = nltk.TextCollection(all_posts)

# Calcul d'une matrice terme de recherche x document

# Renvoie un score tf-idf pour le terme dans le document

td_matrix = {}

for idx in range(len(all_posts)):

post = all_posts[idx]

fdist = nltk.FreqDist(post)

doc_title = data[idx]['title']

url = data[idx]['url']

td_matrix[(doc_title, url)] = {}

for term in fdist.keys():

td_matrix[(doc_title, url)][term] = tc.tf_idf(term, post)

distances = {}

for (title1, url1) in td_matrix.keys():

distances[(title1, url1)] = {}

(min_dist, most_similar) = (1.0, ('', ''))

for (title2, url2) in td_matrix.keys():

#copie des valeurs (un dictionnaire étant mutable)

terms1 = td_matrix[(title1, url1)].copy()

terms2 = td_matrix[(title2, url2)].copy()

#on complete les gaps pour avoir des vecteurs de même longueur

for term1 in terms1:

if term1 not in terms2:

terms2[term1] = 0

for term2 in terms2:

if term2 not in terms1:

terms1[term2] = 0

#on créé des vecteurs de score pour l'ensemble des terms de chaque document

v1 = [score for (term, score) in sorted(terms1.items())]

v2 = [score for (term, score) in sorted(terms2.items())]

#calcul des similarité entre documents : distance cosine entre les deux vecteurs de scores tf-idf

distances[(title1, url1)][(title2, url2)] = \

nltk.cluster.util.cosine_distance(v1, v2)

import pandas as p

df = p.DataFrame(distances)

df.index = df.index.droplevel(0)

df.iloc[:3,:3]

| From an article about Walmart, their move to pay more, and the lessons for the broader economy: http... | Nassau, The Bahamas Airport Travel Advice\n\nIf anyone happens to travel to Nassau, the Bahamas, I thought... | Amazing story about digital transformation http://www.codeforamerica.org/blog/2015/11/30/a-new-approach... | |

|---|---|---|---|

| https://plus.google.com/+TimOReilly/posts/bqErtyYp6co | https://plus.google.com/+TimOReilly/posts/dpQDew7sPbu | https://plus.google.com/+TimOReilly/posts/BRmKh2ycaPe | |

| https://plus.google.com/+TimOReilly/posts/1Lcxb3b8VPH | 0.941522 | 0.984552 | 0.965728 |

| https://plus.google.com/+TimOReilly/posts/7EaHeYc1BiB | 0.969901 | 0.976170 | 0.973205 |

| https://plus.google.com/+TimOReilly/posts/BRmKh2ycaPe | 0.986285 | 0.980943 | 0.000000 |

knn_post7EaHeYc1BiB = df.loc['https://plus.google.com/+TimOReilly/posts/7EaHeYc1BiB']

knn_post7EaHeYc1BiB.sort_values()

#le post [0] est lui-même

knn_post7EaHeYc1BiB[1:6]

Nassau, The Bahamas Airport Travel AdvicennIf anyone happens to travel to Nassau, the Bahamas, I thought... https://plus.google.com/+TimOReilly/posts/dpQDew7sPbu 0.976170 Amazing story about digital transformation http://www.codeforamerica.org/blog/2015/11/30/a-new-approach... https://plus.google.com/+TimOReilly/posts/BRmKh2ycaPe 0.973205 "Surely Democrats and Republicans could agree to cut billions from a failed program like this!" you ... https://plus.google.com/+TimOReilly/posts/1Lcxb3b8VPH 0.983031 How fragile life is, even for the best of us. We heard this morning that our friend Jake Brewer was ... https://plus.google.com/+TimOReilly/posts/jV8jeKeWWyf 0.974682 My dear friend Carolyn Shapiro does amazing projects that help communities understand their history,... https://plus.google.com/+TimOReilly/posts/F1E8rsm3URP 0.994818 Name: https://plus.google.com/+TimOReilly/posts/7EaHeYc1BiB, dtype: float64

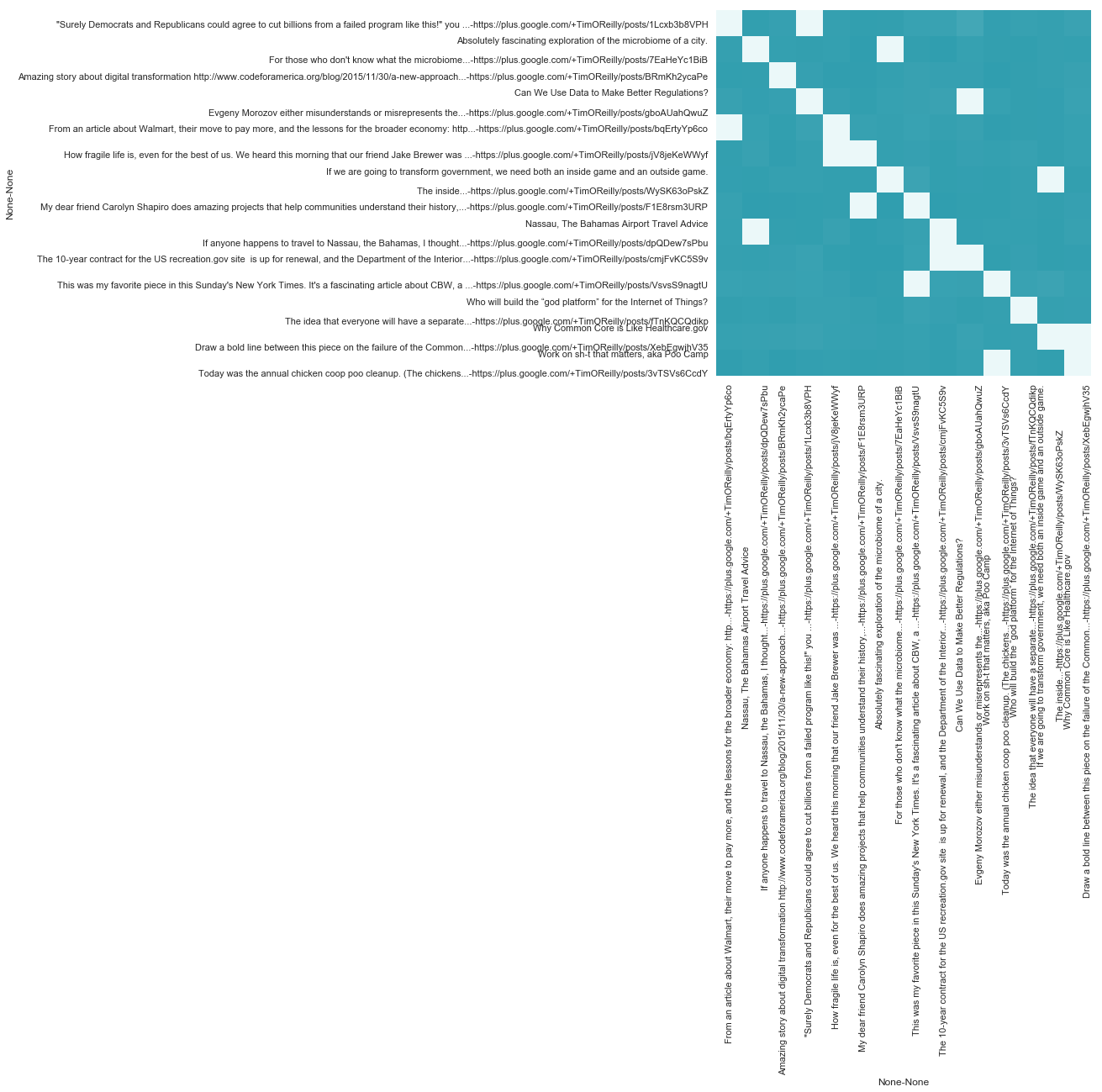

Heatmap#

import pandas as p

import seaborn as sns; sns.set()

import matplotlib.pyplot as plt

fig = plt.figure( figsize=(8,8) )

ax = fig.add_subplot(111)

df = p.DataFrame(distances)

for i in range(len(df)):

df.iloc[i,i]=0

pal = sns.light_palette((210, 90, 60), input="husl",as_cmap=True)

g = sns.heatmap(df, yticklabels=True, xticklabels=True, cbar=False, cmap=pal);

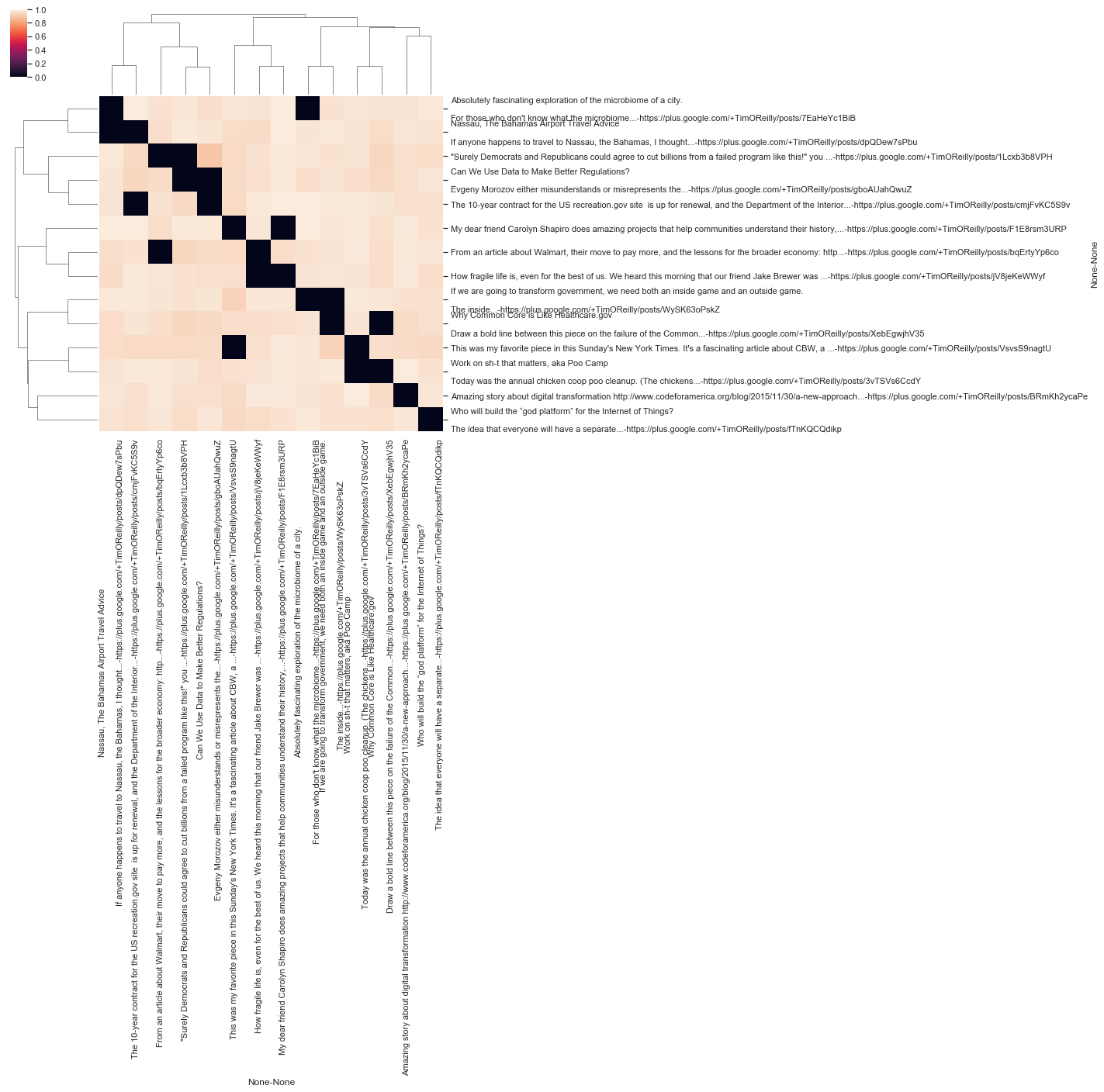

Clustering Hiérarchique#

import scipy.spatial as sp, scipy.cluster.hierarchy as hc

df = p.DataFrame(distances)

for i in range(len(df)):

df.iloc[i,i] = 0

La matrice doit être symmétrique.

mat = df.values

mat = (mat + mat.T) / 2

dist = sp.distance.squareform(mat)

from pkg_resources import parse_version

import scipy

if parse_version(scipy.__version__) <= parse_version('0.17.1'):

# Il peut y avoir quelques soucis avec la méthode Ward

data_link = hc.linkage(dist, method='single')

else:

data_link = hc.linkage(dist, method='ward')

fig = plt.figure( figsize=(8,8) )

g = sns.clustermap(df, row_linkage=data_link, col_linkage=data_link)

# instance de l'objet axes, c'est un peu caché :)

ax = g.ax_heatmap

ax;

<Figure size 576x576 with 0 Axes>

On voit que les documents sont globalement assez différents les uns des autres.

Exercice 5#

Comparaison des différentes fonctions de distances.

import json

import nltk

path = 'ressources_googleplus/107033731246200681024.json'

data = json.loads(open(path).read())

# Nombre de co-occurrences à trouver

N = 25

all_tokens = [token for activity in data for token in \

activity['object']['content'].lower().split()]

finder = nltk.BigramCollocationFinder.from_words(all_tokens)

finder.apply_freq_filter(2)

#filtre des mots trop fréquents

finder.apply_word_filter(lambda w: w in nltk.corpus.stopwords.words('english'))

bim = nltk.collocations.BigramAssocMeasures()

distances_func = [bim.raw_freq, bim.jaccard, bim.dice, bim.student_t, \

bim.chi_sq, bim.likelihood_ratio, bim.pmi]

collocations = {}

collocations_sets = {}

for d in distances_func:

collocations[d] = finder.nbest(d,N)

collocations_sets[d] = set([' '.join(c) for c in collocations[d]])

print('\n')

print(d)

for collocation in collocations[d]:

c = ' '.join(collocation)

print(c)

<function NgramAssocMeasures.raw_freq at 0x000001AB1D070EA0>

o'reilly media

new york

next:economy summit

open data

silicon valley

+jennifer pahlka

common core

data science

real businesses

really great

well worth

bay mini

brett goldstein

cabo pulmo

child welfare

credit card

east bay

government services

granite workers

humble bundle

i'm proud

maker faire

mini maker

never search

next:economy summit.

<bound method NgramAssocMeasures.jaccard of <class 'nltk.metrics.association.BigramAssocMeasures'>>

bottom, “copyright

brett goldstein

cabo pulmo

nbc press:here

nick hanauer

press:here tv

wood fired

yuval noah

silicon valley

+jennifer pahlka

barre historical

computational biologist

mikey dickerson

saul griffith

bay mini

child welfare

credit card

east bay

on-demand economy,

white house

drm-free ebooks

humble bundle

inca trail

italian granite

private sector

<function BigramAssocMeasures.dice at 0x000001AB1D078620>

bottom, “copyright

brett goldstein

cabo pulmo

nbc press:here

nick hanauer

press:here tv

wood fired

yuval noah

silicon valley

+jennifer pahlka

barre historical

computational biologist

mikey dickerson

saul griffith

bay mini

child welfare

credit card

east bay

on-demand economy,

white house

drm-free ebooks

humble bundle

inca trail

italian granite

private sector

<bound method NgramAssocMeasures.student_t of <class 'nltk.metrics.association.BigramAssocMeasures'>>

o'reilly media

silicon valley

next:economy summit

new york

open data

+jennifer pahlka

common core

well worth

real businesses

data science

really great

brett goldstein

cabo pulmo

bay mini

child welfare

east bay

white house

credit card

on-demand economy,

humble bundle

maker faire

mini maker

granite workers

worth reading.

next:economy summit.

<bound method BigramAssocMeasures.chi_sq of <class 'nltk.metrics.association.BigramAssocMeasures'>>

bottom, “copyright

brett goldstein

cabo pulmo

nbc press:here

nick hanauer

press:here tv

wood fired

yuval noah

silicon valley

barre historical

computational biologist

mikey dickerson

saul griffith

+jennifer pahlka

bay mini

child welfare

east bay

white house

credit card

on-demand economy,

drm-free ebooks

weeks ago,

humble bundle

inca trail

italian granite

<bound method NgramAssocMeasures.likelihood_ratio of <class 'nltk.metrics.association.BigramAssocMeasures'>>

silicon valley

+jennifer pahlka

o'reilly media

next:economy summit

common core

new york

brett goldstein

cabo pulmo

well worth

bay mini

child welfare

east bay

white house

credit card

on-demand economy,

maker faire

mini maker

granite workers

open data

humble bundle

worth reading.

real businesses

next:economy summit.

bottom, “copyright

nbc press:here

<bound method NgramAssocMeasures.pmi of <class 'nltk.metrics.association.BigramAssocMeasures'>>

bottom, “copyright

nbc press:here

nick hanauer

press:here tv

wood fired

yuval noah

barre historical

brett goldstein

cabo pulmo

computational biologist

mikey dickerson

saul griffith

drm-free ebooks

weeks ago,

inca trail

italian granite

private sector

value maximization

+bryce roberts

autonomous vehicles

bay mini

bryce roberts

child welfare

east bay

income inequality.

Pour comparer les sets deux à deux, on peut calculer de nouveau une distance de jaccard… des sets de collocations.

for d1 in distances_func:

for d2 in distances_func:

if d1 != d2:

jac = len(collocations_sets[d1].intersection(collocations_sets[d2])) / \

len(collocations_sets[d1].union(collocations_sets[d2]))

if jac > 0.8:

print('Méthode de distances comparables')

print(jac,'\n'+str(d1),'\n'+str(d2))

print('\n')

print('\n')

print('\n')

for d1 in distances_func:

for d2 in distances_func:

if d1 != d2:

jac = len(collocations_sets[d1].intersection(collocations_sets[d2])) / \

len(collocations_sets[d1].union(collocations_sets[d2]))

if jac < 0.2:

print('Méthode de distances avec des résultats très différents')

print(jac,'\n'+str(d1),'\n'+str(d2))

print('\n')

Méthode de distances comparables

1.0

<bound method NgramAssocMeasures.jaccard of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<function BigramAssocMeasures.dice at 0x000001AB1D078620>

Méthode de distances comparables

0.9230769230769231

<bound method NgramAssocMeasures.jaccard of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method BigramAssocMeasures.chi_sq of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances comparables

1.0

<function BigramAssocMeasures.dice at 0x000001AB1D078620>

<bound method NgramAssocMeasures.jaccard of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances comparables

0.9230769230769231

<function BigramAssocMeasures.dice at 0x000001AB1D078620>

<bound method BigramAssocMeasures.chi_sq of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances comparables

0.8518518518518519

<bound method NgramAssocMeasures.student_t of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method NgramAssocMeasures.likelihood_ratio of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances comparables

0.9230769230769231

<bound method BigramAssocMeasures.chi_sq of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method NgramAssocMeasures.jaccard of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances comparables

0.9230769230769231

<bound method BigramAssocMeasures.chi_sq of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<function BigramAssocMeasures.dice at 0x000001AB1D078620>

Méthode de distances comparables

0.8518518518518519

<bound method NgramAssocMeasures.likelihood_ratio of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method NgramAssocMeasures.student_t of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances avec des résultats très différents

0.1111111111111111

<function NgramAssocMeasures.raw_freq at 0x000001AB1D070EA0>

<bound method NgramAssocMeasures.pmi of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances avec des résultats très différents

0.1111111111111111

<bound method NgramAssocMeasures.student_t of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method NgramAssocMeasures.pmi of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances avec des résultats très différents

0.16279069767441862

<bound method NgramAssocMeasures.likelihood_ratio of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method NgramAssocMeasures.pmi of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances avec des résultats très différents

0.1111111111111111

<bound method NgramAssocMeasures.pmi of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<function NgramAssocMeasures.raw_freq at 0x000001AB1D070EA0>

Méthode de distances avec des résultats très différents

0.1111111111111111

<bound method NgramAssocMeasures.pmi of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method NgramAssocMeasures.student_t of <class 'nltk.metrics.association.BigramAssocMeasures'>>

Méthode de distances avec des résultats très différents

0.16279069767441862

<bound method NgramAssocMeasures.pmi of <class 'nltk.metrics.association.BigramAssocMeasures'>>

<bound method NgramAssocMeasures.likelihood_ratio of <class 'nltk.metrics.association.BigramAssocMeasures'>>

import json

import nltk

path = 'ressources_googleplus/107033731246200681024.json'

data = json.loads(open(path).read())

# Nombre de co-occurrences à trouver

N = 25

all_tokens = [token for activity in data for token in \

activity['object']['content'].lower().split()]

finder = nltk.TrigramCollocationFinder.from_words(all_tokens)

finder.apply_freq_filter(2)

#filtre des mots trop fréquents

finder.apply_word_filter(lambda w: w in nltk.corpus.stopwords.words('english'))

trigram_measures = nltk.collocations.TrigramAssocMeasures()

collocations = finder.nbest(trigram_measures.jaccard, N)

for collocation in collocations:

c = ' '.join(collocation)

print(c)

nbc press:here tv

east bay mini

cabo pulmo sunrise

bay mini maker

barre historical society

mini maker faire

press:here tv interview

well worth reading.

child welfare system

italian granite workers

open source software

abc world news

i'm super excited

new york times.

real businesses make