Search images with deep learning (keras)#

Images usually look very different when compared pixel by pixel, but the picture changes once they have been processed by a deep learning model. We convert each image into a feature vector extracted from an intermediate layer of the network and compare these vectors instead.

from jyquickhelper import add_notebook_menu

add_notebook_menu()

%matplotlib inline

Get a pre-trained model#

We choose the model described in paper MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. Pre-trained models are available at deep-learning-models/releases.

from keras.applications.mobilenet import MobileNet

model = MobileNet(input_shape=None, alpha=1.0, depth_multiplier=1,
                  dropout=1e-3, include_top=True,
                  weights='imagenet', input_tensor=None,
                  pooling=None, classes=1000)

model

Using TensorFlow backend.

WARNING:tensorflow:From c:\python372_x64\lib\site-packages\tensorflow\python\framework\op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.
Instructions for updating:
Colocations handled automatically by placer.
WARNING:tensorflow:From c:\python372_x64\lib\site-packages\keras\backend\tensorflow_backend.py:3445: calling dropout (from tensorflow.python.ops.nn_ops) with keep_prob is deprecated and will be removed in a future version.
Instructions for updating:
Please use rate instead of keep_prob. Rate should be set to rate = 1 - keep_prob.

<keras.engine.training.Model at 0x21037b63b00>

model.name

'mobilenet_1.00_224'

The model is stored here:

import os

os.listdir(os.path.join(os.environ.get('USERPROFILE', os.environ.get('HOME', '.')),
                        ".keras", "models"))

['densenet121_weights_tf_dim_ordering_tf_kernels.h5',

'imagenet_class_index.json',

'mobilenet_1_0_224_tf.h5',

'mobilenet_v2_weights_tf_dim_ordering_tf_kernels_1.0_224.h5']

print(model.summary())

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

input_1 (InputLayer) (None, 224, 224, 3) 0

_________________________________________________________________

conv1_pad (ZeroPadding2D) (None, 225, 225, 3) 0

_________________________________________________________________

conv1 (Conv2D) (None, 112, 112, 32) 864

_________________________________________________________________

conv1_bn (BatchNormalization (None, 112, 112, 32) 128

_________________________________________________________________

conv1_relu (ReLU) (None, 112, 112, 32) 0

_________________________________________________________________

conv_dw_1 (DepthwiseConv2D) (None, 112, 112, 32) 288

_________________________________________________________________

conv_dw_1_bn (BatchNormaliza (None, 112, 112, 32) 128

_________________________________________________________________

conv_dw_1_relu (ReLU) (None, 112, 112, 32) 0

_________________________________________________________________

conv_pw_1 (Conv2D) (None, 112, 112, 64) 2048

_________________________________________________________________

conv_pw_1_bn (BatchNormaliza (None, 112, 112, 64) 256

_________________________________________________________________

conv_pw_1_relu (ReLU) (None, 112, 112, 64) 0

_________________________________________________________________

conv_pad_2 (ZeroPadding2D) (None, 113, 113, 64) 0

_________________________________________________________________

conv_dw_2 (DepthwiseConv2D) (None, 56, 56, 64) 576

_________________________________________________________________

conv_dw_2_bn (BatchNormaliza (None, 56, 56, 64) 256

_________________________________________________________________

conv_dw_2_relu (ReLU) (None, 56, 56, 64) 0

_________________________________________________________________

conv_pw_2 (Conv2D) (None, 56, 56, 128) 8192

_________________________________________________________________

conv_pw_2_bn (BatchNormaliza (None, 56, 56, 128) 512

_________________________________________________________________

conv_pw_2_relu (ReLU) (None, 56, 56, 128) 0

_________________________________________________________________

conv_dw_3 (DepthwiseConv2D) (None, 56, 56, 128) 1152

_________________________________________________________________

conv_dw_3_bn (BatchNormaliza (None, 56, 56, 128) 512

_________________________________________________________________

conv_dw_3_relu (ReLU) (None, 56, 56, 128) 0

_________________________________________________________________

conv_pw_3 (Conv2D) (None, 56, 56, 128) 16384

_________________________________________________________________

conv_pw_3_bn (BatchNormaliza (None, 56, 56, 128) 512

_________________________________________________________________

conv_pw_3_relu (ReLU) (None, 56, 56, 128) 0

_________________________________________________________________

conv_pad_4 (ZeroPadding2D) (None, 57, 57, 128) 0

_________________________________________________________________

conv_dw_4 (DepthwiseConv2D) (None, 28, 28, 128) 1152

_________________________________________________________________

conv_dw_4_bn (BatchNormaliza (None, 28, 28, 128) 512

_________________________________________________________________

conv_dw_4_relu (ReLU) (None, 28, 28, 128) 0

_________________________________________________________________

conv_pw_4 (Conv2D) (None, 28, 28, 256) 32768

_________________________________________________________________

conv_pw_4_bn (BatchNormaliza (None, 28, 28, 256) 1024

_________________________________________________________________

conv_pw_4_relu (ReLU) (None, 28, 28, 256) 0

_________________________________________________________________

conv_dw_5 (DepthwiseConv2D) (None, 28, 28, 256) 2304

_________________________________________________________________

conv_dw_5_bn (BatchNormaliza (None, 28, 28, 256) 1024

_________________________________________________________________

conv_dw_5_relu (ReLU) (None, 28, 28, 256) 0

_________________________________________________________________

conv_pw_5 (Conv2D) (None, 28, 28, 256) 65536

_________________________________________________________________

conv_pw_5_bn (BatchNormaliza (None, 28, 28, 256) 1024

_________________________________________________________________

conv_pw_5_relu (ReLU) (None, 28, 28, 256) 0

_________________________________________________________________

conv_pad_6 (ZeroPadding2D) (None, 29, 29, 256) 0

_________________________________________________________________

conv_dw_6 (DepthwiseConv2D) (None, 14, 14, 256) 2304

_________________________________________________________________

conv_dw_6_bn (BatchNormaliza (None, 14, 14, 256) 1024

_________________________________________________________________

conv_dw_6_relu (ReLU) (None, 14, 14, 256) 0

_________________________________________________________________

conv_pw_6 (Conv2D) (None, 14, 14, 512) 131072

_________________________________________________________________

conv_pw_6_bn (BatchNormaliza (None, 14, 14, 512) 2048

_________________________________________________________________

conv_pw_6_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_dw_7 (DepthwiseConv2D) (None, 14, 14, 512) 4608

_________________________________________________________________

conv_dw_7_bn (BatchNormaliza (None, 14, 14, 512) 2048

_________________________________________________________________

conv_dw_7_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_pw_7 (Conv2D) (None, 14, 14, 512) 262144

_________________________________________________________________

conv_pw_7_bn (BatchNormaliza (None, 14, 14, 512) 2048

_________________________________________________________________

conv_pw_7_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_dw_8 (DepthwiseConv2D) (None, 14, 14, 512) 4608

_________________________________________________________________

conv_dw_8_bn (BatchNormaliza (None, 14, 14, 512) 2048

_________________________________________________________________

conv_dw_8_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_pw_8 (Conv2D) (None, 14, 14, 512) 262144

_________________________________________________________________

conv_pw_8_bn (BatchNormaliza (None, 14, 14, 512) 2048

_________________________________________________________________

conv_pw_8_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_dw_9 (DepthwiseConv2D) (None, 14, 14, 512) 4608

_________________________________________________________________

conv_dw_9_bn (BatchNormaliza (None, 14, 14, 512) 2048

_________________________________________________________________

conv_dw_9_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_pw_9 (Conv2D) (None, 14, 14, 512) 262144

_________________________________________________________________

conv_pw_9_bn (BatchNormaliza (None, 14, 14, 512) 2048

_________________________________________________________________

conv_pw_9_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_dw_10 (DepthwiseConv2D) (None, 14, 14, 512) 4608

_________________________________________________________________

conv_dw_10_bn (BatchNormaliz (None, 14, 14, 512) 2048

_________________________________________________________________

conv_dw_10_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_pw_10 (Conv2D) (None, 14, 14, 512) 262144

_________________________________________________________________

conv_pw_10_bn (BatchNormaliz (None, 14, 14, 512) 2048

_________________________________________________________________

conv_pw_10_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_dw_11 (DepthwiseConv2D) (None, 14, 14, 512) 4608

_________________________________________________________________

conv_dw_11_bn (BatchNormaliz (None, 14, 14, 512) 2048

_________________________________________________________________

conv_dw_11_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_pw_11 (Conv2D) (None, 14, 14, 512) 262144

_________________________________________________________________

conv_pw_11_bn (BatchNormaliz (None, 14, 14, 512) 2048

_________________________________________________________________

conv_pw_11_relu (ReLU) (None, 14, 14, 512) 0

_________________________________________________________________

conv_pad_12 (ZeroPadding2D) (None, 15, 15, 512) 0

_________________________________________________________________

conv_dw_12 (DepthwiseConv2D) (None, 7, 7, 512) 4608

_________________________________________________________________

conv_dw_12_bn (BatchNormaliz (None, 7, 7, 512) 2048

_________________________________________________________________

conv_dw_12_relu (ReLU) (None, 7, 7, 512) 0

_________________________________________________________________

conv_pw_12 (Conv2D) (None, 7, 7, 1024) 524288

_________________________________________________________________

conv_pw_12_bn (BatchNormaliz (None, 7, 7, 1024) 4096

_________________________________________________________________

conv_pw_12_relu (ReLU) (None, 7, 7, 1024) 0

_________________________________________________________________

conv_dw_13 (DepthwiseConv2D) (None, 7, 7, 1024) 9216

_________________________________________________________________

conv_dw_13_bn (BatchNormaliz (None, 7, 7, 1024) 4096

_________________________________________________________________

conv_dw_13_relu (ReLU) (None, 7, 7, 1024) 0

_________________________________________________________________

conv_pw_13 (Conv2D) (None, 7, 7, 1024) 1048576

_________________________________________________________________

conv_pw_13_bn (BatchNormaliz (None, 7, 7, 1024) 4096

_________________________________________________________________

conv_pw_13_relu (ReLU) (None, 7, 7, 1024) 0

_________________________________________________________________

global_average_pooling2d_1 ( (None, 1024) 0

_________________________________________________________________

reshape_1 (Reshape) (None, 1, 1, 1024) 0

_________________________________________________________________

dropout (Dropout) (None, 1, 1, 1024) 0

_________________________________________________________________

conv_preds (Conv2D) (None, 1, 1, 1000) 1025000

_________________________________________________________________

act_softmax (Activation) (None, 1, 1, 1000) 0

_________________________________________________________________

reshape_2 (Reshape) (None, 1000) 0

=================================================================

Total params: 4,253,864

Trainable params: 4,231,976

Non-trainable params: 21,888

_________________________________________________________________

None

len(model.layers)

93

Images#

We collect images from pixabay.

Raw images#

from pyquickhelper.filehelper import unzip_files

if not os.path.exists('simages'):
    os.mkdir('simages')

files = unzip_files("data/dog-cat-pixabay.zip", where_to="simages")

len(files), files[0]

(31, 'simages\cat-1151519__480.jpg')

from mlinsights.plotting import plot_gallery_images

plot_gallery_images(files[:2]);

from keras.preprocessing.image import array_to_img, img_to_array, load_img

img = load_img('simages/cat-2603300__480.jpg')

x = img_to_array(img)

x.shape

(480, 320, 3)

import matplotlib.pyplot as plt

plt.imshow(x / 255)

plt.axis('off');

keras implements optimized functions to load and process images. The code below loads the images without modifying them. It creates an iterator which can be iterated as many times as we want.

params = dict(rescale=1./255)

I suggest trying without the parameter rescale to see the differences.

The neural network expects numbers in [0, 1] not in [0, 255].
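To make the effect of rescale concrete, here is a minimal numpy sketch of the multiplication it applies. The 1x2 pixel array is made up for illustration, and the snippet only mimics the scaling step, not the rest of ImageDataGenerator.

```python
import numpy

# A tiny fake image with raw pixel values in [0, 255] (hypothetical data).
raw = numpy.array([[[0, 128, 255], [64, 32, 16]]], dtype=numpy.float32)

# rescale=1./255 multiplies every value by 1/255, mapping them into [0, 1]
# (up to floating point rounding).
rescaled = raw * (1. / 255)

print(raw.min(), raw.max())
print(rescaled.min(), rescaled.max())
```

Without this step the same array would be fed to the network with values up to 255.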

from keras.preprocessing.image import ImageDataGenerator

import numpy

augmenting_datagen = ImageDataGenerator(**params)

itim = augmenting_datagen.flow(x[numpy.newaxis, :, :, :])

# zip(range(0,2)) means to stop the loop after 2 iterations

imgs = list(img[0] for i, img in zip(range(0,2), itim))

len(imgs), imgs[0].shape

(2, (480, 320, 3))

plot_gallery_images(imgs);

But the iterator can also generate modified copies of the images. See the ImageDataGenerator parameters for the kinds of modifications that are implemented.

augmenting_datagen_2 = ImageDataGenerator(rotation_range=40, channel_shift_range=9, **params)

itim = augmenting_datagen_2.flow(x[numpy.newaxis, :, :, :])

imgs = list(img[0] for i, img in zip(range(0,10), itim))

plot_gallery_images(imgs);

Iterator on images#

We create an iterator that considers every subfolder of images. We also need to resize the images to (224, 224), which is the input size the loaded neural network expects.

flow = augmenting_datagen.flow_from_directory('.', batch_size=1,
                                              target_size=(224, 224), classes=['simages'])

imgs = list(img[0][0] for i, img in zip(range(0,10), flow))

len(imgs), imgs[0].shape, type(flow)

Found 62 images belonging to 1 classes.

(10,

(224, 224, 3),

keras_preprocessing.image.directory_iterator.DirectoryIterator)

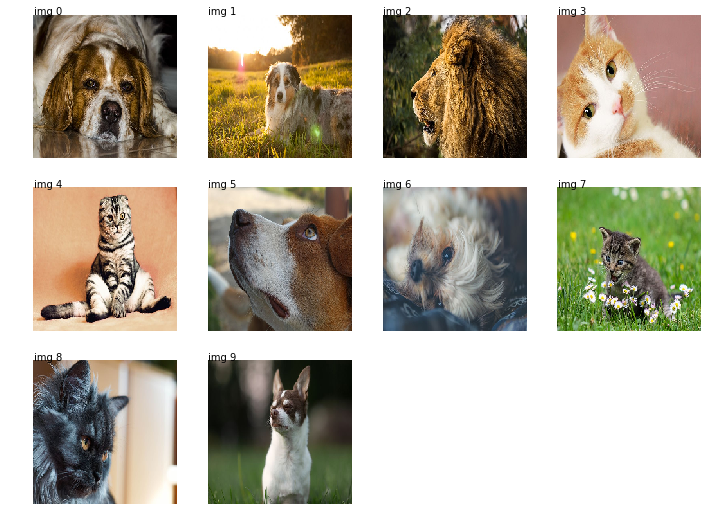

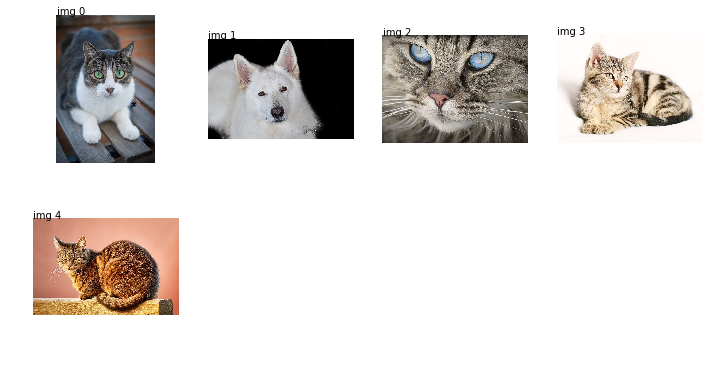

plot_gallery_images(imgs);

How to get the image name?

def get_current_index(flow):
    # The iterator is one step ahead.
    return (flow.batch_index + flow.n - 1) % flow.n

def get_file_index(flow):
    n = get_current_index(flow)
    return flow.index_array[n]

flow = augmenting_datagen.flow_from_directory('.', batch_size=1, target_size=(224, 224),
                                              classes=['simages'], shuffle=False)

imgs = list((img[0][0], get_current_index(flow), flow.index_array[get_current_index(flow)],
             flow.filenames[get_file_index(flow)]) for i, img in zip(range(0,31), flow))

imgs[0][1:]

Found 62 images belonging to 1 classes.

(0, 0, 'simages\cat-1151519__480.jpg')

imgs[-1][1:]

(30, 30, 'simages\wolf-2865653__480.jpg')
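The arithmetic in get_current_index can be checked on a toy iterator. ToyIterator below is a made-up class, not the Keras one. It assumes, as the comment in get_current_index suggests, that batch_index has already moved to the next position when a batch is returned, and that the batch size is 1.

```python
class ToyIterator:
    """Hypothetical stand-in for an iterator with batch_size=1 whose
    batch_index is advanced before the current item is handed back."""

    def __init__(self, n):
        self.n = n
        self.batch_index = 0

    def __iter__(self):
        return self

    def __next__(self):
        current = self.batch_index
        # Move on (and wrap around) before returning the current position.
        self.batch_index = (self.batch_index + 1) % self.n
        return current


def get_current_index(flow):
    # Step back by one, modulo the number of items.
    return (flow.batch_index + flow.n - 1) % flow.n


toy = ToyIterator(3)
seen = [(item, get_current_index(toy)) for _, item in zip(range(5), toy)]
print(seen)
```

Each pair holds the position actually returned and the position recovered by the formula. The two agree, including after the iterator wraps around.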

imgs = list((img[0][0], flow.filenames[get_current_index(flow)])
            for i, img in zip(range(0,10), flow))

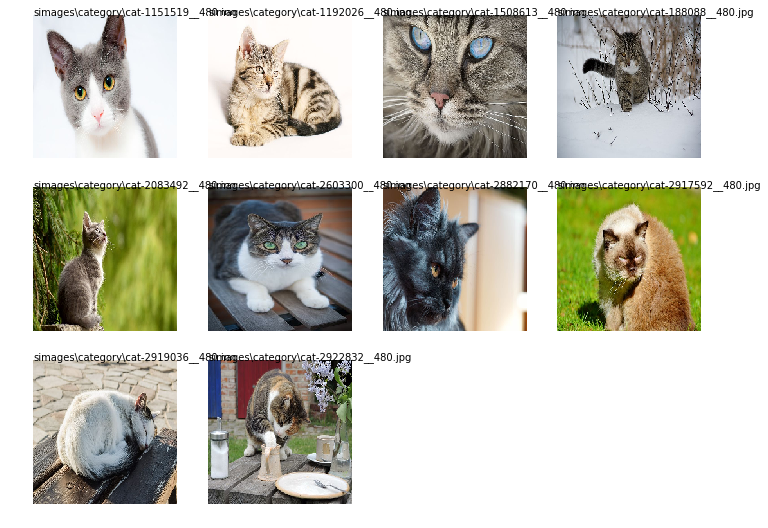

plot_gallery_images([_[0] for _ in imgs], [_[1] for _ in imgs]);

To keep the original order, shuffling is disabled with shuffle=False.

flow = augmenting_datagen.flow_from_directory('.', batch_size=1, target_size=(224, 224),
                                              shuffle=False, classes=['simages'])

imgs = list((img[0][0], flow.filenames[get_file_index(flow)])
            for i, img in zip(range(0,7), flow))

plot_gallery_images([_[0] for _ in imgs], [_[1] for _ in imgs]);

Found 62 images belonging to 1 classes.

len(flow)

62

Search among images#

We use the class SearchEnginePredictionImages.

The idea of the search engine#

The deep network was trained to classify images from the ImageNet competition. Its 93 layers (the value returned by len(model.layers) above) gradually transform an image into classification results. We assume the last layers contain the information the network needs to recognize objects: that information is tied less to the raw pixels than to the content of the image. In particular, we would like a query image with a dark background not to necessarily return images with a dark background.

We reshape an image into (224x224) which is the size the network ingests. We propagate the inputs until the layer just before the last one. Its output will be considered as the featurized image. We do that for a specific set of images called the neighbors. When a new image comes up, we apply the same process and find the closest images among the set of neighbors.
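The search step itself can be sketched without any network. In the snippet below the feature vectors are random numbers standing in for the network outputs, and closest is a hypothetical helper written for this illustration; it only shows the "find the closest rows" part of the idea.

```python
import numpy

rng = numpy.random.RandomState(0)

# Stand-in for the featurized neighbors: 6 images, 4 features each.
# In the notebook these rows come from the network, here they are random.
features = rng.rand(6, 4)


def closest(features, query, k=3):
    # Euclidean distance between the query and every stored feature vector.
    dist = numpy.sqrt(((features - query) ** 2).sum(axis=1))
    # Positions of the k smallest distances, closest first.
    order = numpy.argsort(dist)[:k]
    return dist[order], order


# Querying with an image already in the set returns that image first,
# at distance 0.
score, index = closest(features, features[2])
print(score, index)
```

A brute-force scan like this is enough for a few dozen images; a dedicated nearest neighbors structure becomes useful when the set grows.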

model = MobileNet(input_shape=None, alpha=1.0, depth_multiplier=1,
                  dropout=1e-3, include_top=True,
                  weights='imagenet', input_tensor=None,
                  pooling=None, classes=1000)

model

<keras.engine.training.Model at 0x2103ef28c18>

from keras.models import Model

output = model.layers[len(model.layers)-2].output

model = Model(model.input, output)

flow = augmenting_datagen.flow_from_directory('.', batch_size=1, target_size=(224, 224),
                                              classes=['simages'], shuffle=False)

imgs = [img[0][0] for i, img in zip(range(0,31), flow)]

Found 62 images belonging to 1 classes.

outputs = [model.predict(im[numpy.newaxis, :, :, :]) for im in imgs]

all_outputs = numpy.stack([o.ravel() for o in outputs])

We have the features. We build the neighbors.

from sklearn.neighbors import NearestNeighbors

knn = NearestNeighbors()

knn.fit(all_outputs)

NearestNeighbors(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=None, n_neighbors=5, p=2,

radius=1.0)

We extract the neighbors for a new image.

one_image = imgs[5]

one_output = model.predict(one_image[numpy.newaxis, :, :, :])

score, index = knn.kneighbors([one_output.ravel()])

score, index

(array([[0. , 0.22400763, 0.25415188, 0.2831644 , 0.29702211]]),

array([[ 5, 28, 2, 1, 11]], dtype=int64))

We need to retrieve images for indexes stored in index.

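import os

# Caveat: os.listdir returns entries in arbitrary order and also lists subfolders,
# so positions may not match the iterator; flow.filenames follows the iterator's order.
names = os.listdir("simages")

names = [os.path.join("simages", n) for n in names]

disp = [names[i] for i in index.ravel()]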

disp

['simages\cat-2603300__480.jpg', 'simages\schafer-dog-2669660__480.jpg', 'simages\cat-1508613__480.jpg', 'simages\cat-1192026__480.jpg', 'simages\cat-2946028__480.jpg']

plot_gallery_images(disp);

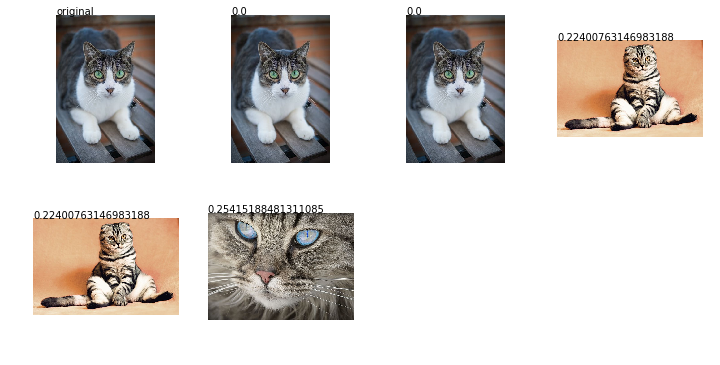

Using one intermediate layer close to the output#

We repeat the same search with SearchEnginePredictionImages, a class implemented in mlinsights that wraps the steps above.

model = MobileNet(input_shape=None, alpha=1.0, depth_multiplier=1,
                  dropout=1e-3, include_top=True,
                  weights='imagenet', input_tensor=None,
                  pooling=None, classes=1000)

model

<keras.engine.training.Model at 0x2104955f208>

gen = ImageDataGenerator(rescale=1./255)

iterimf = gen.flow_from_directory(".", batch_size=1, target_size=(224, 224),
                                  classes=['simages'], shuffle=False)

Found 62 images belonging to 1 classes.

from mlinsights.search_rank import SearchEnginePredictionImages

se = SearchEnginePredictionImages(model, fct_params=dict(layer=len(model.layers) - 2),
                                  n_neighbors=5)

se.fit(iterimf)

se.features_.shape

(62, 1000)

list(se.metadata_)[:5]

['i', 'name']

se.metadata_.shape

(62, 2)

Let’s choose one image.

name = se.metadata_.loc[5, "name"]

name

'simages\cat-2603300__480.jpg'

img = load_img(name, target_size=(224, 224))

x = img_to_array(img)

gen = ImageDataGenerator(rescale=1./255)

iterim = gen.flow(x[numpy.newaxis, :, :, :], batch_size=1)

score, ind, meta = se.kneighbors(iterim)

score, ind, meta

(array([0.        , 0.        , 0.22400763, 0.22400763, 0.25415188]),
 array([ 5, 36, 59, 28, 33], dtype=int64),
      i                                            name
 5    5                    simages\cat-2603300__480.jpg
 36  36           simages\category\cat-2603300__480.jpg
 59  59  simages\category\shotlanskogo-2934720__480.jpg
 28  28           simages\shotlanskogo-2934720__480.jpg
 33  33           simages\category\cat-1508613__480.jpg)

texts = ['original'] + [str(_) for _ in score]

imgs = [name] + list(meta.name)

plot_gallery_images(imgs, texts);

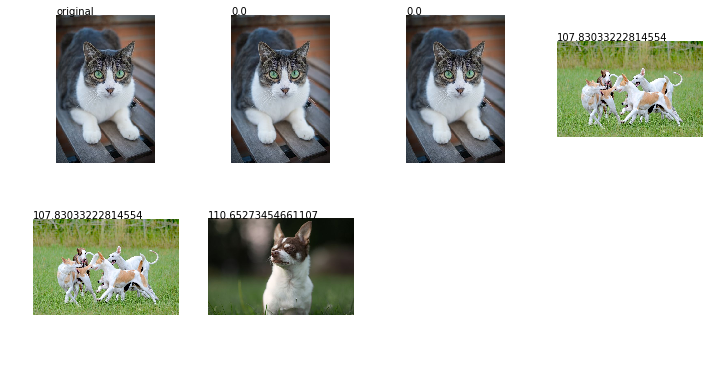

Using one intermediate layer close to the input#

se = SearchEnginePredictionImages(model, fct_params=dict(layer=1), n_neighbors=5)

se.fit(iterimf)

se.features_.shape

(62, 151875)

iterim = gen.flow(x[numpy.newaxis, :, :, :], batch_size=1)

score, ind, meta = se.kneighbors(iterim)

texts = ['original'] + [str(_) for _ in score]

imgs = [name] + list(meta.name)

plot_gallery_images(imgs, texts);

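This is worse, but expected: with layer=1 the features have 151875 = 225 x 225 x 3 values, the output shape of conv1_pad in the summary above, so the search compares almost raw pixels.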
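A small numpy experiment, on synthetic data and independent of the network, illustrates why comparing images close to the pixel level is fragile: shifting an image by a single pixel can move it as far away as an unrelated image. Random noise is an extreme case chosen for simplicity; natural images are smoother, so the effect is usually weaker.

```python
import numpy

rng = numpy.random.RandomState(0)

# Two unrelated random "images" with values in [0, 1] (synthetic data).
img = rng.rand(32, 32, 3)
other = rng.rand(32, 32, 3)

# The same image shifted by one pixel along the width (wrapping around).
shifted = numpy.roll(img, 1, axis=1)


def pixel_distance(a, b):
    # Euclidean distance between the flattened pixel arrays.
    return numpy.sqrt(((a - b) ** 2).sum())


d_shift = pixel_distance(img, shifted)
d_other = pixel_distance(img, other)
print(d_shift, d_other)
```

The two distances have the same order of magnitude even though the shifted image shows exactly the same content.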

Going further#

The original neural network has not been changed and was chosen to be small (93 layers). Other options are available for better performance. The imported model can also be trained on a classification problem if there is such information to leverage. Even though the model was trained on millions of images, a couple of thousand are enough to train the last layers. More generally, the model can be trained as long as there is a way to compute a gradient. We could label the results of this search engine and train the model on pairs of images, each pair annotated with the order in which the two images should be ranked.

We can use the pairwise transform (example of code: ranking.py). For every pair of images, we tell whether the search engine should have ranked them in that order or in the opposite order. Each image is represented by the features produced by the neural network. We train a classifier on the resulting database of pairs. A training algorithm based on a gradient will have to propagate the gradient through the neural network.
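The pairwise transform can be sketched with numpy. The features, the relevance labels and the variable names below are all made up for illustration; in the setting described here the rows of X would be the features produced by the network. This is one common formulation of the transform, not necessarily the one used in ranking.py.

```python
import numpy

rng = numpy.random.RandomState(0)

# Hypothetical data: 5 images described by 3 features each, and a made-up
# relevance label per image (higher means it should be ranked first).
X = rng.rand(5, 3)
relevance = numpy.array([3, 1, 2, 0, 4])

pairs, labels = [], []
for i in range(len(X)):
    for j in range(len(X)):
        if i == j:
            continue
        # One training row per ordered pair: difference of features,
        # label 1 if image i should be ranked before image j, else 0.
        pairs.append(X[i] - X[j])
        labels.append(int(relevance[i] > relevance[j]))

pairs = numpy.array(pairs)
labels = numpy.array(labels)
print(pairs.shape, labels.mean())
```

Any binary classifier can then be trained on (pairs, labels); propagating its gradient back to the network weights is the part that requires a differentiable model.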